“Nothing about history is pre-ordained or inevitable: historical outcomes depend on unrelated decisions that are taken at various points in time. The job of the historian is to identify the factors that are considered most influential in explaining particular events in history. Different historians will have different perspectives on the things that are most important in explaining these historical outcomes.”

This was the preamble to a very interesting radio discussion on the history of sport. It served as a kind of ex-ante justification for leaving some apparently important sporting history out of a 30-minute radio programme. It occurred to me that this historian's personalised approach to telling the history of sport contrasted sharply with the "detached", technical way in which we are expected to carry out most of our complex or strategic evaluations.

We evaluate complex, multi-billion-euro development programmes involving hundreds of interventions in some of the poorest and most complex environments in the world. As evaluators, we are being asked, in effect, to tell our own history of that programme: What happened? What worked and why? What were the key factors that affected the success or failure of the programme in various country contexts? What lessons can be learned for the future?

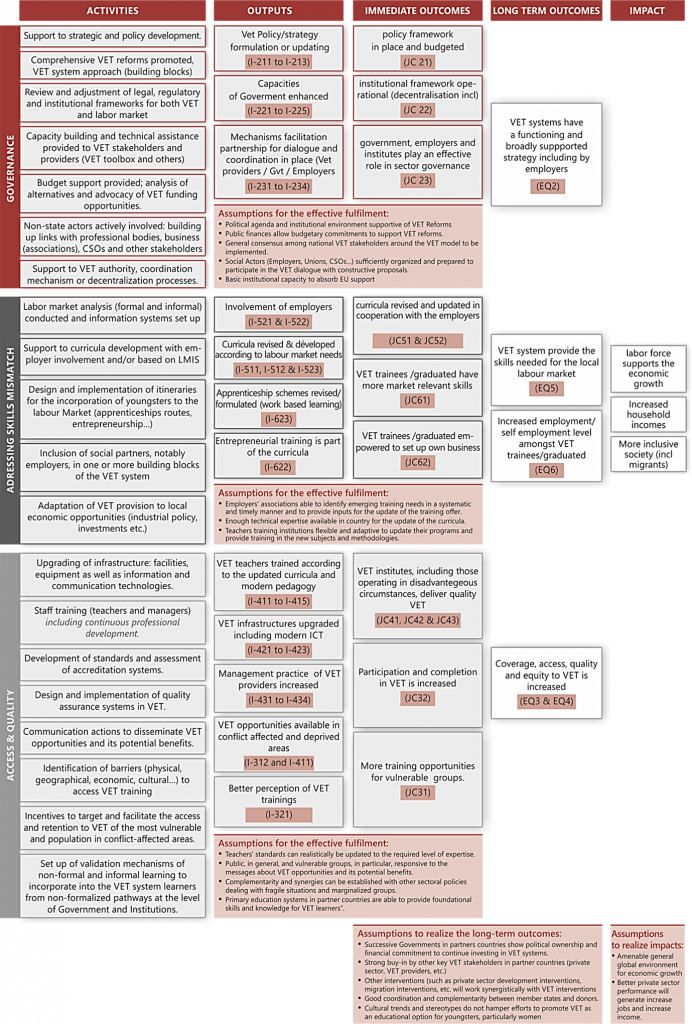

Most programme evaluations of this kind are now “theory-based”. Theory-based evaluations test the hypothetical links between the “inputs” provided by the programme donor and the eventual outcomes and impacts that the programme was aiming to achieve. These hypothetical links are illustrated in a so-called Theory of Change.

While most donor programmes describe the problem they wish to address and define the "results" that the intervention is expected to achieve, it is quite unusual to find any explicit ex-ante presentation of the chains of causation that are expected to lead to those results. A key starting point for many theory-based evaluations is therefore the reconstruction of a Theory of Change. This involves a review of the (usually limited) documentation produced in the early stages of the programme but, in practice, it often relies heavily on interviews with key interlocutors who were involved in programme design and/or implementation.

Basing any evaluation on the ex-post creation of a story of expected causality has obvious and inherent risks of hindsight bias. It also risks the exclusion of elements of the story that would have been considered important when the programme was being developed but which, for various reasons (including initial programme failures), may have become less important over time.

Theory-based evaluations require the evaluator to focus his/her investigations on the specific chains of causality presented in the Theory of Change. If these chains are biased in favour of the linkages that seem strongest after the event, valuable opportunities for learning may be lost.

Another problem with the Theory of Change approach as it is often practised is that the evidence to be used in answering particular evaluation questions is determined in advance. In many evaluations, an important first step is the identification of "judgement criteria" and "indicators" for each evaluation question. These criteria and indicators are frequently the subject of intense discussion and debate with project managers in the client organisation. This in itself poses a threat to the independence of the evaluation and risks introducing further bias into the direction the research takes.

Even if such biases can be avoided, forcing evaluators to collect evidence on pre-determined issues in this way reduces the likelihood that the evaluation will produce interesting or unexpected insights. When we require that an evaluation question be answered using particular pieces of evidence that we already expect to be important, the answer to that question is, of course, more likely to be the one we expected.

A further problem with the theory-based approach is that it tends to be built on simplistic, linear chains of causation. Yet just as our sports historian described the sometimes complex sequences of events that lead to particular historical outcomes, development work that aims to affect complex social phenomena such as poverty, economic fragility and governance does not readily lend itself to linear explanations.

Compounding the problem of linear causation, the theory we are testing in our evaluations often implies that all change revolves around the donor and its programme, rather than around a range of interrelated contextual factors in which the programme plays only a part. In some cases, the donor programme is seminal; in many others, it simply isn't. Where the donor's input is decisive, linear chains of causation can be observed more easily. In the more usual scenario, however, a solid explanation of what has worked and why demands a much greater understanding of events that occur outside the programme.

While it is reasonable and right to impose some methodological structure on important evaluation exercises, this should not act as a straitjacket that limits learning opportunities or the generation of new knowledge. Just as our sports historian had the freedom to highlight the historical events he considered important from his own qualified (but personal) perspective, a good evaluation should leave sufficient room for specialists to examine a programme from different points of view and, in particular, to investigate issues that lie outside the Theory of Change if these turn out to be important. As in history, international development outcomes are neither easy to predict nor neatly explained by simplified theoretical constructs.

This article was written by GDSI Managing Director Pauric Brophy.